Insight Columns

Scaling comparative company analysis with AI.

Role

Lead Product Designer

Time Frame

2 sprints (roughly 6 weeks)

Context

Our team had built an LLM chatbot, ChatCBI, that had turned out to be hugely popular with our customer base. However, as with all LLM answers, problems arose when users wanted to scale standardized analysis across more than 15-20 companies.

Leadership initially approached the team asking us to build a more advanced data table inside of ChatCBI, but I saw a clear need for this functionality in other places on the platform. I advocated for building it as a centralized technology that chat could later utilize.

Stakeholders

Trio/Quad

Me, Product Designer

Product Manager

Engineering Manager

Research Analyst (Content Producer)

Engineering team

1 frontend engineer

1 full stack

3 backend

Leadership team

VP of Design

Chief Product Officer

Chief Technology Officer

CEO

Users

The project targeted our primary user segment, Corporate Strategy departments. When those departments decide how to approach a market, it often comes down to a decision of whether to build the technology, partner with a company, or acquire one. As part of this process, the department has to understand the market, find the right companies, evaluate them, reach out to them, and then complete the strategic maneuver.

This project would come into their process at the evaluation step. Here they are trying to understand the different players in the market and which ones would be a good fit for their strategy. It requires an enormous amount of manual research, both inside CB Insights and elsewhere: tracking down details on Google, monitoring the news, etc. Then they need to synthesize all of that research into a ready deliverable.

Problem Statement

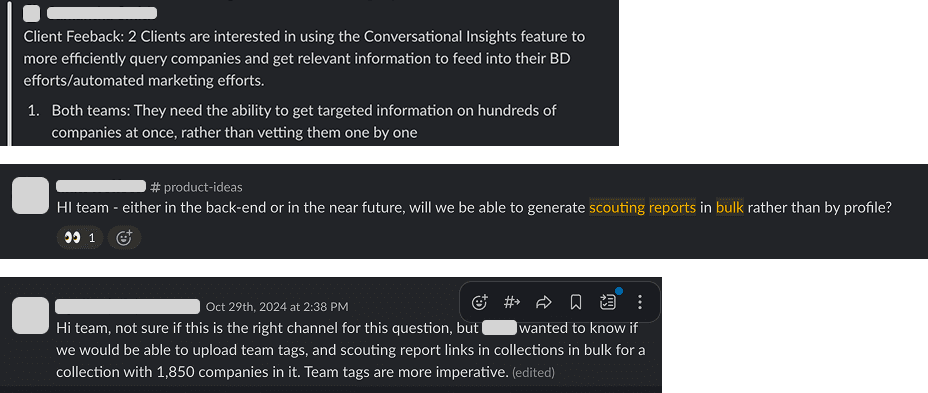

So the team knew that we needed to target that research time. We had already achieved this for individual companies with our Instant Insights AI reporting suite, where the user could get fully fledged reports on companies that filled in gaps in our data (like Product Offerings, SWOT Analysis, etc.), drastically reducing the amount of research our users needed to do. However, we still had a gap in doing this analysis across a large number of companies. Since the launch of Instant Insights, users had been reaching out with requests to run bulk reporting or compare company reports. Comparing companies was a messy process that required the user to stitch together information across spreadsheets, meeting notes, company websites, and third-party sources.

So we had a few issues to solve:

Reduce the difficulty of doing comparative analysis on companies.

Cover some of the gaps in our data for our clients.

Reduce the synthesis time of that analysis.

Detangling The Request

Our team's underlying philosophy in building the chat product had been that ChatCBI should not become the interface of the platform, but that it should be able to utilize the features of the platform to construct its analysis.

The original request had been to scale this analysis in our LLM chat product, but I knew right away that it wouldn't work very well, for several reasons:

LLMs struggled to build and retain big lists of companies. Solving that would mean adding another feature, effectively doubling the scope of the project.

Our LLM product was designed to give qualitative answers, not to deliver large data tables.

We wanted bulk analysis to have a dedicated place to live in the platform, and not in the ephemeral form of a chat.

Building an advanced table infrastructure would require a large feature set that the LLM needed to execute consistently, and it would add significant load time. The LLM would have to:

Insert the new advanced functionality into the answer

Create a dedicated prompt for each column

Ensure standardization across the table

We weren't saying that we couldn't solve these problems, but that solving them in a sprint or two would be too ambitious. Before any of that could happen, we knew we needed to build the infrastructure to conduct AI analysis at scale based on hard-coded lists.

Competitive Analysis

To build evidence for our approach, we researched existing solutions in the market. This type of feature already had some presence, but most solutions did not target private company analysis directly.

AlphaSense

AlphaSense is one of our primary competitors and they had pioneered a similar solution in the space. We learned that:

AlphaSense had built fully fledged templates that allowed users to get started on advanced functionality very quickly.

Their underlying document infrastructure gave them a big advantage for public company data, but this did not extend as well to private company data.

Hebbia

Hebbia is an indirect competitor that acts as an analysis tool where you can insert different data sources.

Despite being a chat-first product, Hebbia had to build a dedicated interface to scale analysis, which speaks to some of the considerations above.

Hebbia always had chat available to manipulate the data table or ask questions about the data table.

Attio

Attio is not a direct competitor, but offers similar functionality for their CRM solutions.

Attio enabled more advanced options for generating an AI analysis column.

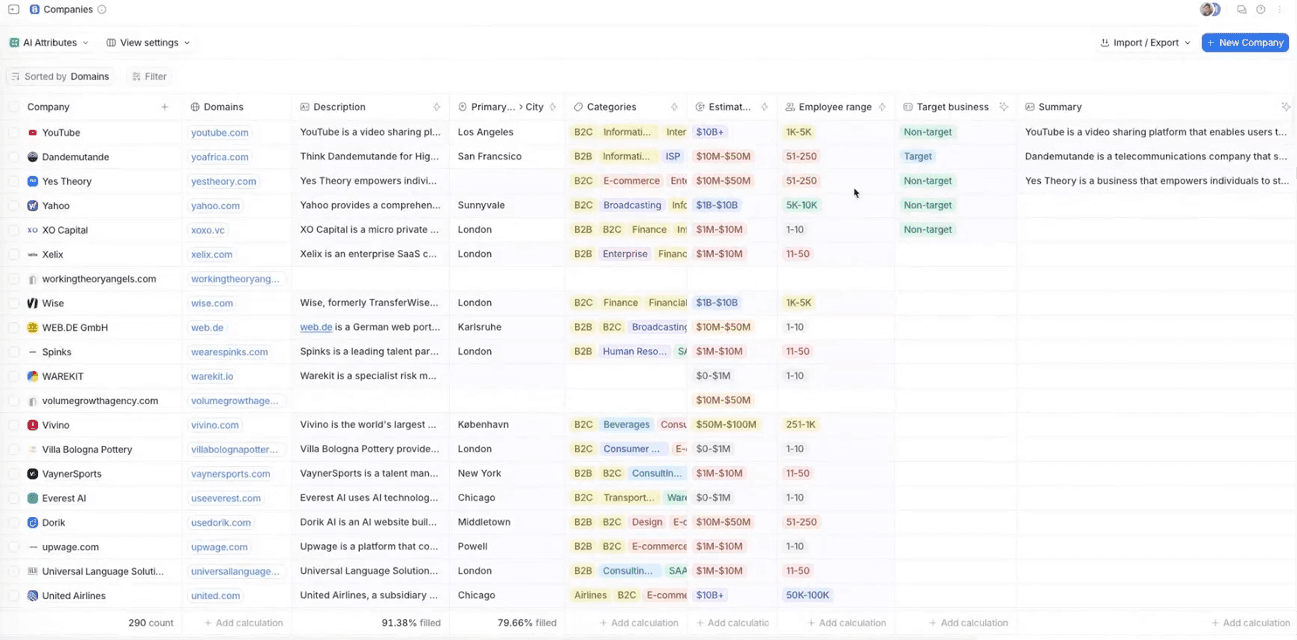

They had functionality that allowed the LLM to classify companies in a standardized way, tagging them with things like business categories or whether the company qualified as a target customer.

Differentiators

After conducting this analysis we saw a few areas where we could significantly differentiate:

With our AI reporting and web-informed LLM infrastructure, we could provide better answers than our competitors, especially in areas where structured data was limited (like product descriptions).

We could provide users with sourcing that allowed them to dig deeper where needed.

We could merge AI analysis with hard data, creating the mixture of data our users needed to evaluate companies.

Wireframes

When designing our wireframes, we wanted to build an area of the platform dedicated to tabular analysis. In that space we would allow users to mix our data and customized AI-generated columns to conduct a fully fledged analysis. We knew that integrating Chat was too great an ask for the single sprint we had to ship an initial product, so we would stick with developing the core of the feature.

We wanted to focus the feature on analysis and abstract away all other functionality for the sake of simplicity. We would allow the user to start from an existing list, rather than have them deal with finding companies, managing the list, or monitoring it. Just set up a data table, add AI columns, write in instructions, and let AI do the rest.

Another assumption was that our users would still need to create deliverables outside of the platform. So if the user wanted to export the data, that was fine: we wanted to automate the gathering of the data, not displace Excel or PowerPoint.

Where Should It Live?

We already had a feature for tabular analysis, called Watchlists. The problem was that the feature was trying to do too much. In a watchlist you could:

Source companies

Manage your list

Conduct tabular analysis

Get the latest news

Chart the performance of the list

In addition, watchlists existed across three different features of our sidebar that were not directly connected (markets, competitors, and company trackers). Watchlists were only used by 10-15% of our users month over month, so all the functionality was effectively buried.

Adding our new feature to watchlists would place it three steps deep into the platform, making it very unlikely to be successful.

I proposed organizing around centralized features instead of building all the functionality in one space. This would make the core functionality more easily accessible and avoid loading it into one space. So:

Watchlist would be for managing lists.

The newly developed Dashboard would be the space for aggregated analysis of a list.

The feed would be the place where you got news for your list.

The new feature would be the place where you conducted in-depth company analysis and comparison.

We also wanted to be adaptable to a future with different data types. While we started with companies, we saw a future where this could be applied to deals, business relationships, earnings calls, and so on. That was not supported by watchlists. The functionality being built into watchlists was specific to companies, which made it difficult to pivot to different content types.

After several discussions with the Leadership team, it became clear that they were not willing to invest in a new solution. So we needed to build in watchlists.

User Conversations

We spoke to five users as part of our discovery. All were corporate strategists, and their companies ranged from Fortune 500 companies to banks to more specialized firms. Our goal was to understand the following:

How did users do this work today?

What were the areas where this solution could have the most impact?

Which tools did they use?

How did CB Insights fit into that process?

The conversations varied more in scope than we would have liked, but we learned that:

Users were having trouble keeping tabs on companies. They built spreadsheets and decks based on a wide range of sources. In the end, they could rarely gather information fast enough to stay on top of fast-moving technology markets.

Projects tend to operate around a similar template that gets slightly adapted each time.

In these templates, they are trying to bring together financials, expert intelligence (i.e. predictive data), customer perspectives, and qualitative sources to build a fully formed picture of each company and compare across candidates.

The analysis usually takes place in many different places, including CB Insights, but synthesis happens in Excel.

In addition, we received some customer Excel templates and built some case studies to illustrate each client's workflow.

From this analysis, we felt that we had strong enough conviction to move forward to high fidelity design.

High Fidelity

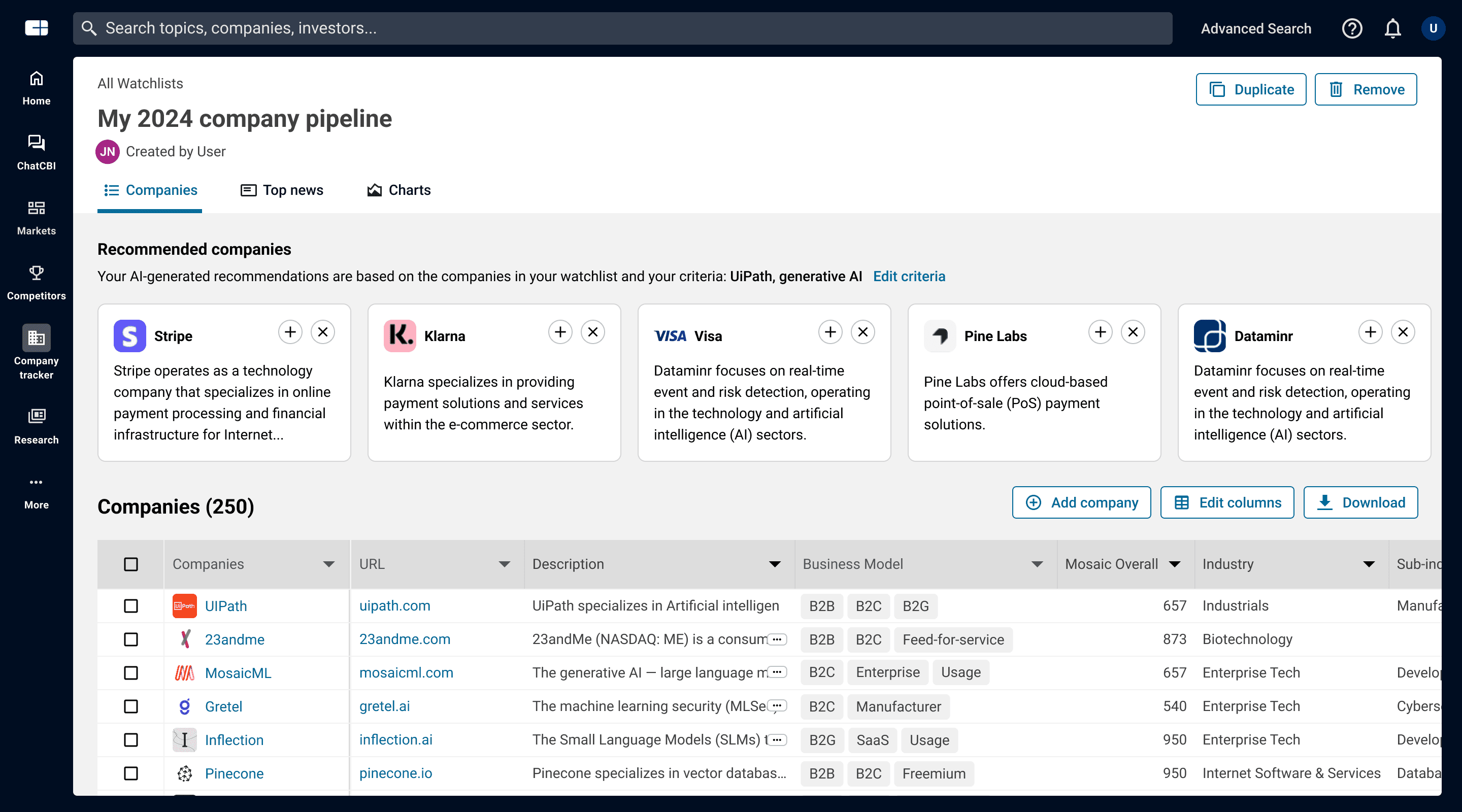

Watchlist Core UI

The first challenge was to simplify the watchlist UI. It had not been invested in since its launch a year earlier and had a number of issues:

The page was too crowded with different features. It was a company sourcing space, a list management space, and an analysis space, and there were news and charts on top of that. There was no clear sense of what the user needed to do on the page.

Much of the screen dedicated to analysis was crowded by different elements claiming vertical space.

The two other tabs in the watchlist (Top News & Charts) were used by less than 10% of watchlist users (who in turn were only 15% of total active users, so under 1.5% of all active users). The company recommendations hovered around 15%.

The tables themselves needed a visual lift.

I purposely cut very deep. I tried to remove anything that wasn't related to company analysis. Company sourcing should happen when you create the list or in other features of the platform. The unused tabs had to go. Hide as many secondary actions as possible. Reclaim vertical space. Remove the conflicting blue and teal. Focus on building the analysis. This resulted in the following proposal:

There were countless back-and-forths. Around 40 options were considered. We had to keep the tabs despite low usage, and two news tabs would be added in. Add company needed to be moved back to the table. So we reached a compromise here:

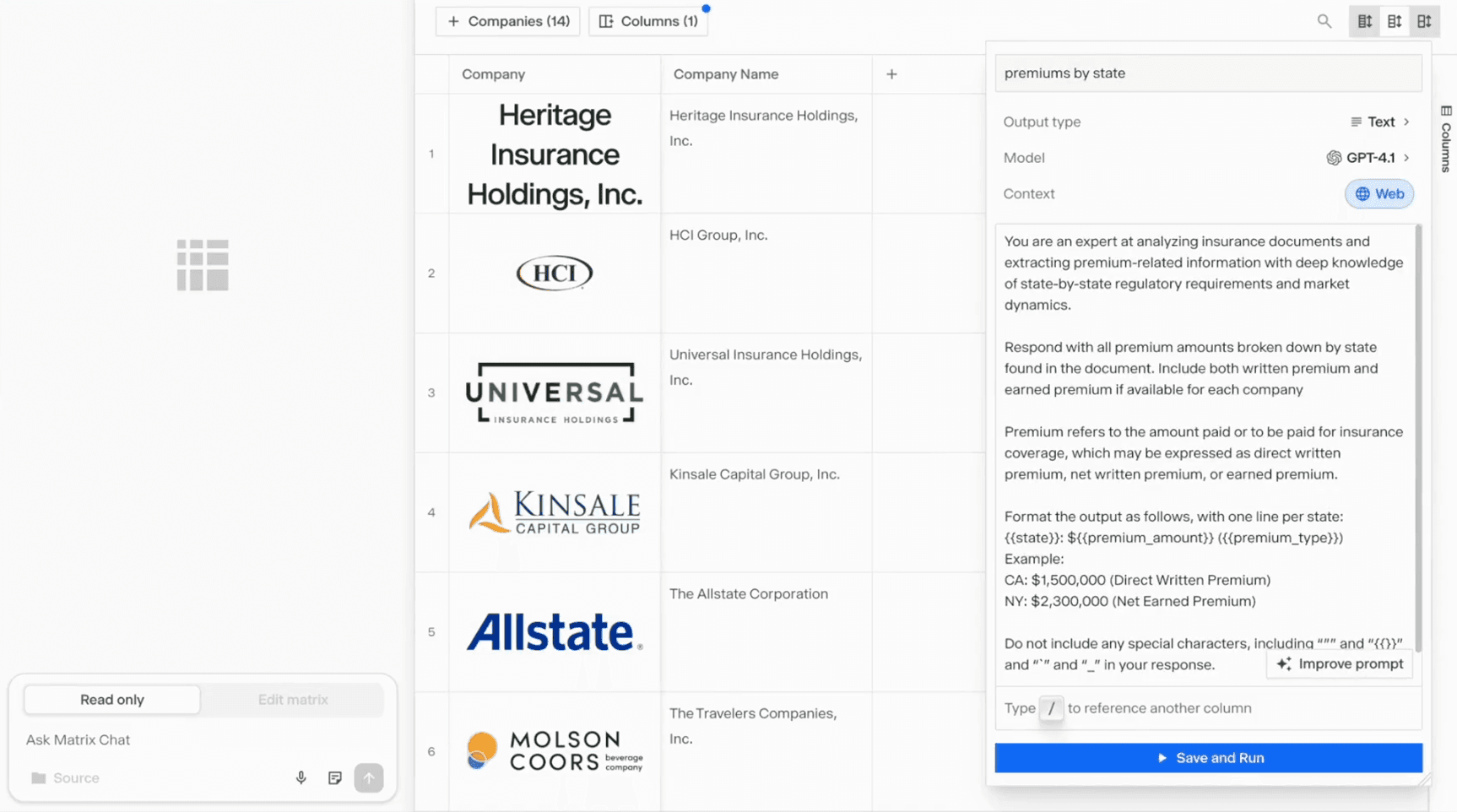

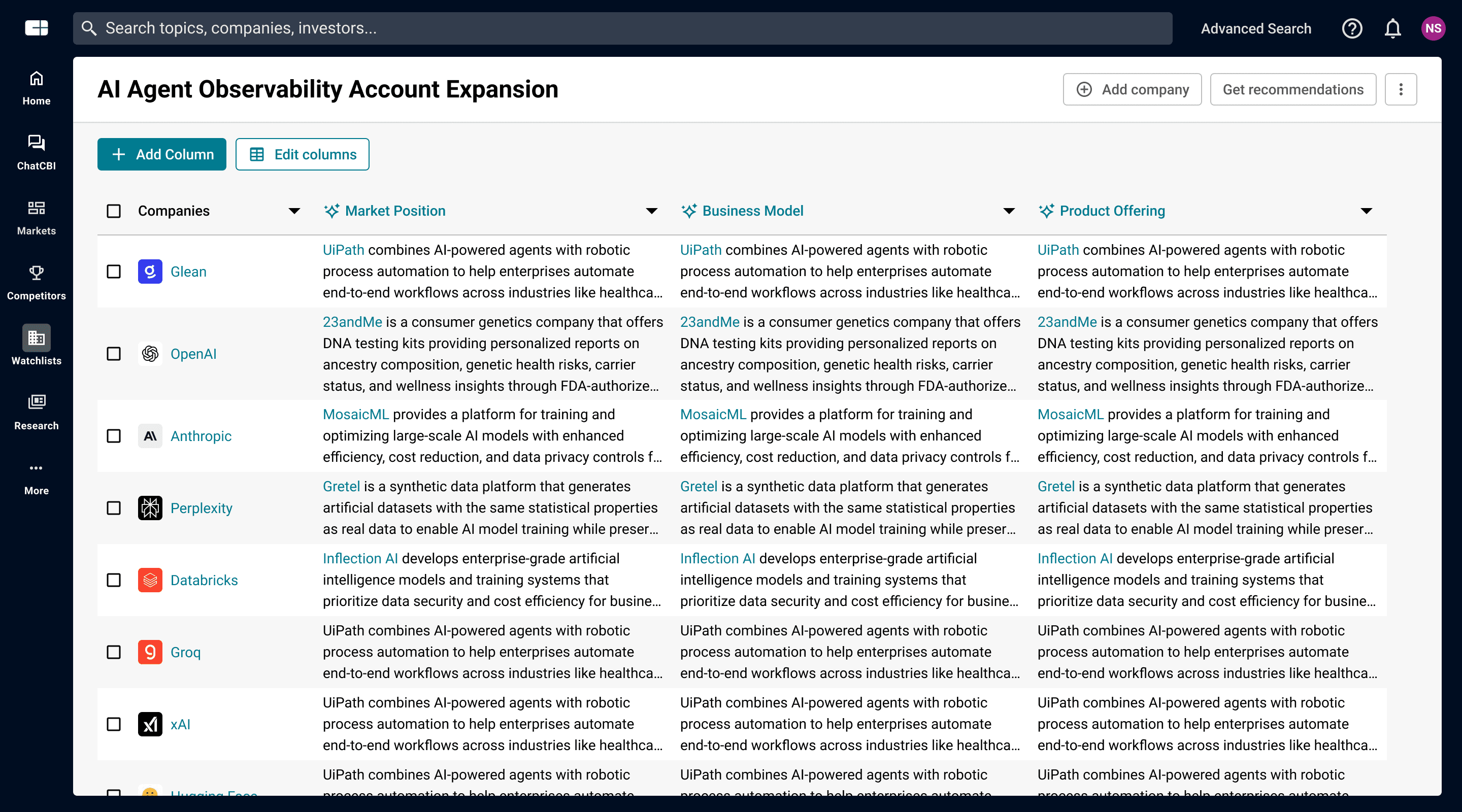

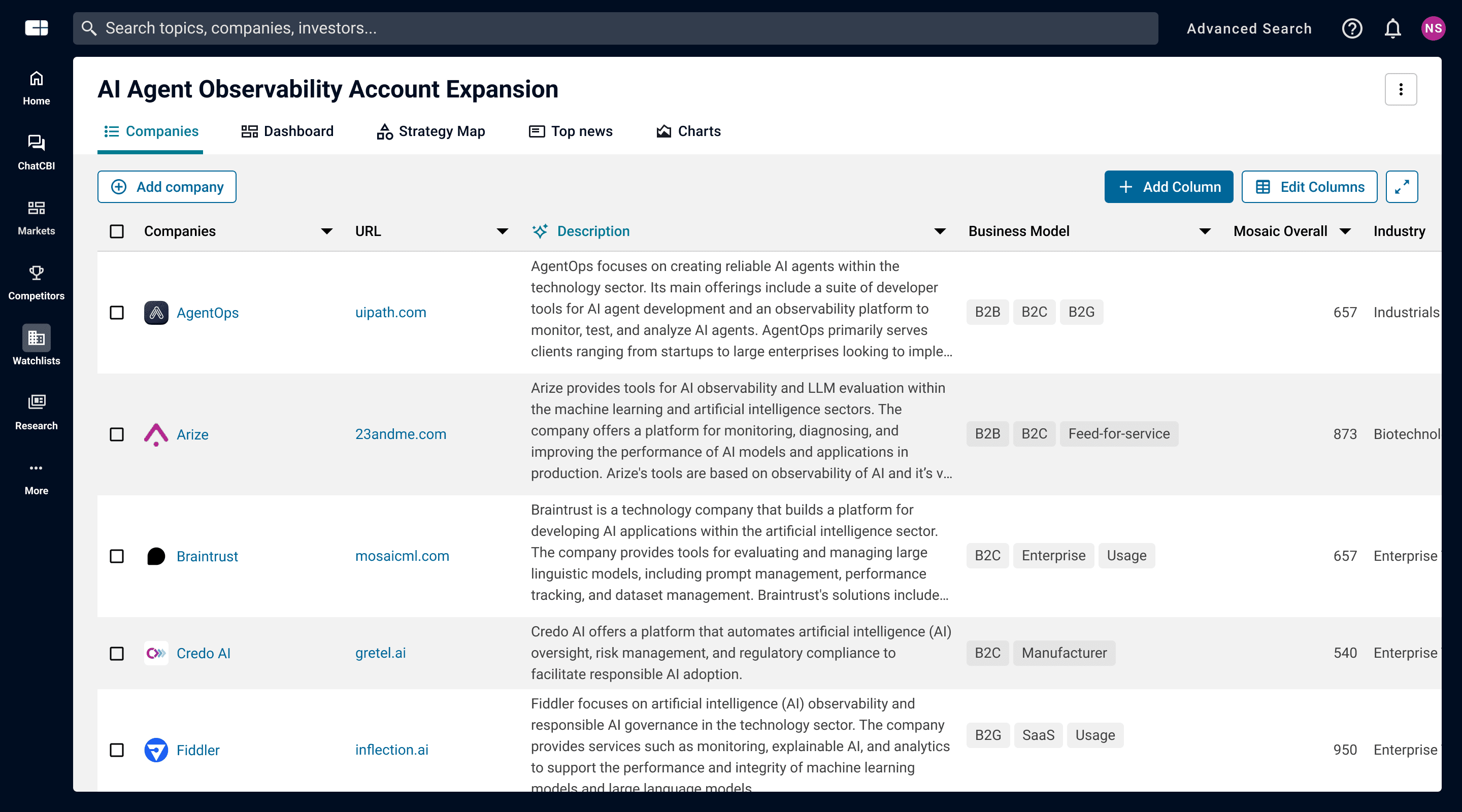

The Insight Column

By making Add Column the most eye-catching part of the platform, we wanted to put the functionality front and center. When the user clicked the dropdown, we gave some extra context to clarify the difference in functionality between our column types, and left the dropdown open for additional column types down the line.

We wanted to make it as simple as possible from there, avoiding extra fields wherever we could. In internal review we had gotten a few requests for extra fields, like Formatting Instructions or a refresh time, but for version one we wanted to stay simple.

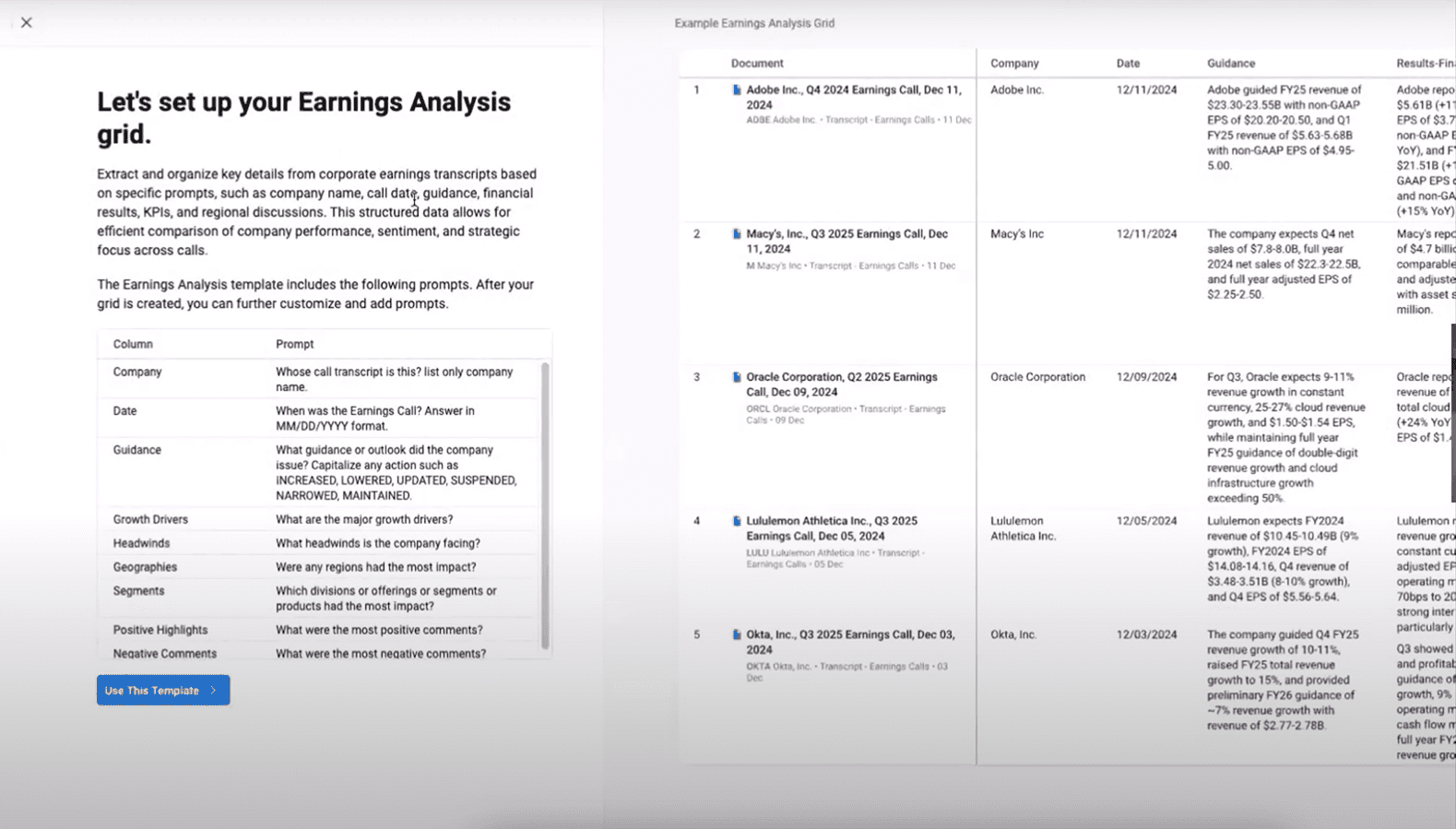

Based on our learnings from user conversations and internal knowledge we built out 6 different strong use cases for the feature. That way the user would get a sense of what they could use the feature for, get an example of a good prompt, but most importantly, we could have the user create valuable columns without any effort on their part.

Once the user had locked in their prompt, we would allow them to get a one-cell preview to see if the response looked good. It also worked as a double check to keep users from racking up LLM costs with less valuable columns.
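A rough sketch of that preview-then-run flow is below. It is an illustration only, not our actual implementation: `ask_llm`, `InsightColumn`, the sample companies, and the sample prompt are all hypothetical, and the stub returns a canned string rather than calling a real model.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for a real LLM call; it only echoes its inputs.
def ask_llm(prompt: str, company: str) -> str:
    return f"[answer for {company}: {prompt}]"

@dataclass
class InsightColumn:
    """One AI column: a single prompt applied to every company in a list."""
    name: str
    prompt: str

    def preview(self, companies: list[str],
                llm: Callable[[str, str], str] = ask_llm) -> str:
        """Generate only the first cell so the prompt can be checked cheaply."""
        if not companies:
            raise ValueError("the list has no companies to preview")
        return llm(self.prompt, companies[0])

    def run(self, companies: list[str],
            llm: Callable[[str, str], str] = ask_llm) -> dict[str, str]:
        """Generate the full column, assuming one LLM call per company."""
        return {company: llm(self.prompt, company) for company in companies}

# Hypothetical list and prompt, for illustration only.
companies = ["Acme Robotics", "Globex", "Initech"]
column = InsightColumn("Product Offering", "Summarize the company's main products.")

first_cell = column.preview(companies)  # one call, shown to the user first
cells = column.run(companies)           # one call per company, after approval
```

Because every cell in a column shares the same prompt, the previewed cell is representative of the rest, which is what makes a single-cell check useful before paying for the whole column.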

Finally, we added sourcing to the LLM-generated text, allowing the user to verify where the information was being pulled from. We relied on the iconography from our chatbot to get this across.

Alpha Period

During the design period, a few concerns had arisen around LLM cost and availability. We knew some users had saved watchlists with 10,000 companies, and adding only one column could rack up a significant bill for LLM usage. In addition, it could completely clog up our LLM capacity and cause downtime for the entire platform. So we needed to load test with a smaller group.
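To make the scale of that concern concrete, here is a back-of-the-envelope sketch. It assumes each generated cell costs one LLM call, and the per-call price is a made-up placeholder, not a real figure from our billing.

```python
def llm_calls_needed(num_companies: int, num_columns: int = 1) -> int:
    """Assuming one LLM call per cell, calls scale as rows x columns."""
    if num_companies < 0 or num_columns < 0:
        raise ValueError("counts must be non-negative")
    return num_companies * num_columns

def estimated_cost_cents(num_companies: int, num_columns: int,
                         cents_per_call: int) -> int:
    """Total cost in cents for generating every cell once."""
    return llm_calls_needed(num_companies, num_columns) * cents_per_call

# Hypothetical price per call, for illustration only.
ASSUMED_CENTS_PER_CALL = 1

calls = llm_calls_needed(10_000, 1)
cost_cents = estimated_cost_cents(10_000, 1, ASSUMED_CENTS_PER_CALL)
print(f"{calls} calls, about ${cost_cents / 100:.2f} at the assumed price")
```

The point is less the dollar amount than the multiplication: a single column on a very large list triggers as many calls as there are rows, all at once.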

Since LLM products are difficult to usability test without real LLM generated answers and load times, we used this as an opportunity to learn more about usage patterns. I wanted to learn:

Were watchlists lowering the number of users who would access the solution?

Did users understand how to use the solution?

Was it helpful?

Were the suggested columns helpful?

What types of questions were our users asking?

Results

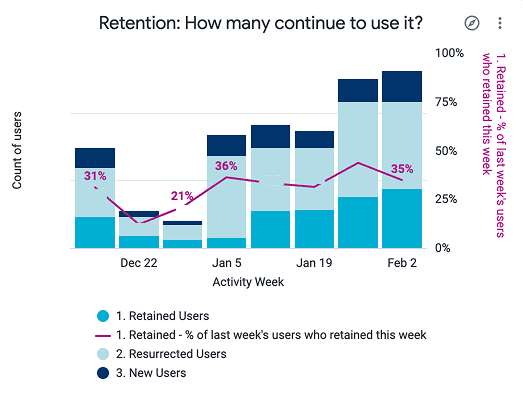

We had 70 users activated for the alpha period. They had been informed that they had access to a new AI tool for comparative analysis, but we had purposely not given much detail. The period ran for four weeks through the month of December, which lowered usage over the period overall.

Only 10 users used the solution, but their sentiment was extremely positive. It already saved them significant time in company analysis. Half of them used it regularly throughout the period, with one user generating close to 60 columns. The key takeaways overall were as follows:

Users who discovered the feature seemed to intuitively know how to use it. It was unclear whether the rest simply didn't encounter the feature, but we saw no indication of users having issues creating the column.

The lack of an export feature was a complete blocker to release. Users needed to be able to continue their analysis in Excel.

Over 60% of the columns created used the default suggestions.

There were no immediate red flags for LLM usage.

Use cases

Beyond the suggested columns, we were able to gather the custom prompts users had written and get a better sense of how the tool might be used. Our research analyst also combed through all the queries and answers to assess their quality.

Product Differentiation - not just what products they offer, but how they stand out in the market.

SWOT Analysis - A better understanding of how the company performed in the market.

Risk Assessments - Risk factors

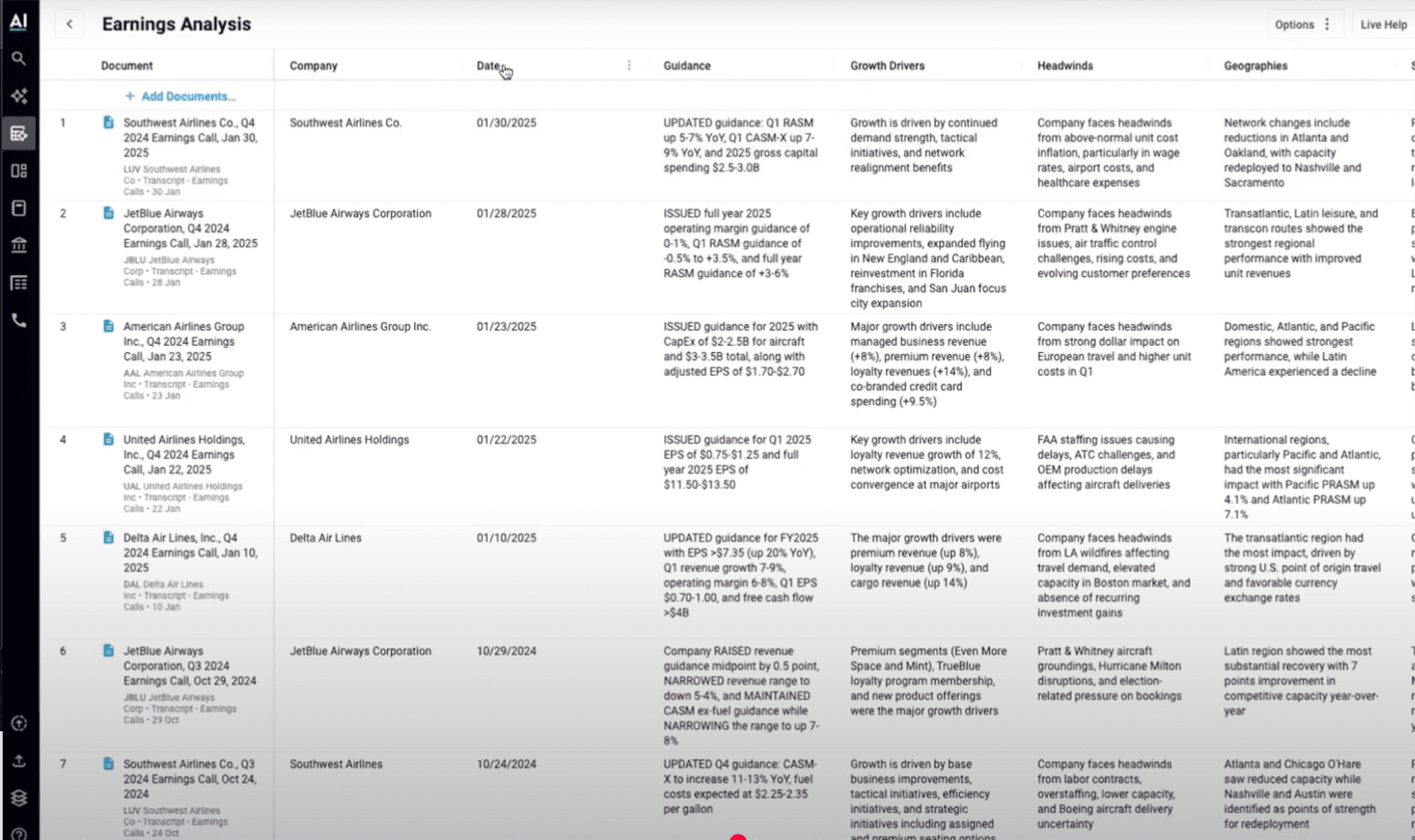

Earnings call related questions - Using the data source to better understand how public companies are thinking about private markets.

M&A Analysis - A high-level assessment of M&A fit for the company.

Hiring and People - Understanding management teams and hiring trends.

Future Vision

After we had established that the insight columns were valuable, we wanted to introduce Templates. We had certain jobs that our platform could help users achieve, and these tended to be similar across user segments. Working with our research analyst, we came up with three initial templates that would essentially do most of the work for some of our core jobs to be done. That would put less pressure on users to create individual columns and let them start with most of the work already done.

In addition, we knew before the project even started that the types of columns we could provide would expand. Customers had consistently requested an editable column where they could add their personal notes. We were also actively considering a classification column, where the user could set up a set of criteria and classify companies based on those criteria.

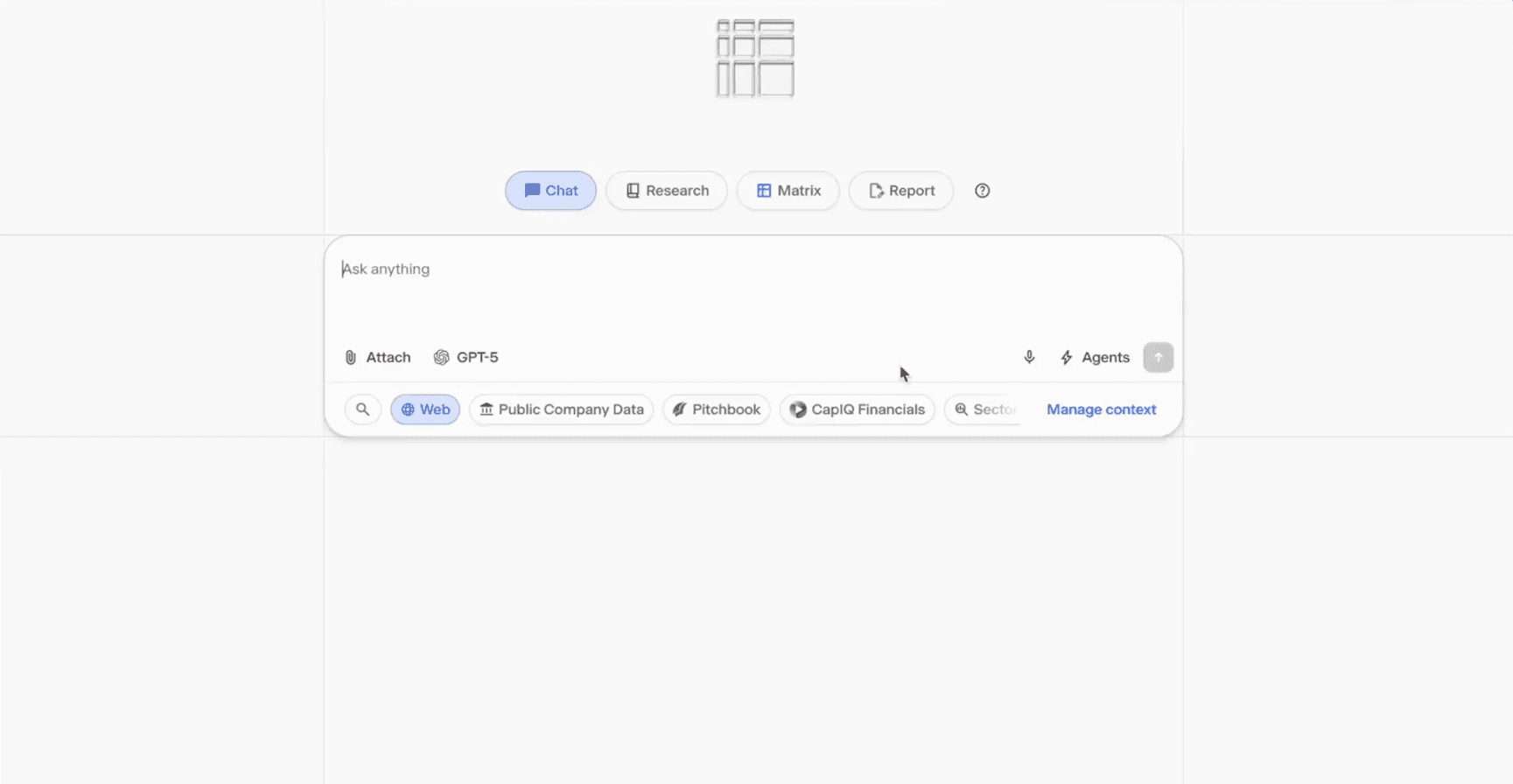

The final piece of the puzzle would be for our chat to utilize the column infrastructure we had built. First, chat would use it to build custom templates based on users' requests, allowing them to go beyond the standardized templates. The last step would be to have chat utilize the infrastructure as part of its answers, allowing for more advanced use cases in answers as well as seamless transitions between chat and watchlists.

Release Results